Target Audience

Anyone with a Claude.ai subscription

What You’ll Learn

• Generate effective prompts

• Normal vs Thinking mode

• Make beautiful slide decks

Tools: Claude.ai, the Anthropic Console, gamma.app, and any brand guide.

Follow along as I transform a messy YouTube transcript into a coherent Gamma slide deck using two Claude-generated prompts.

Learn to generate effective prompts and when to use normal vs. thinking mode. See how Gamma turns great content and a brand guide into a coherent, beautiful deck.

Follow along, or skip to the appendix, Normal vs Thinking Mode.

Step 1: Setup and YouTube Transcript

Get started:

- Sign up to Claude.ai on any paid plan.

- Buy $1 of Anthropic API credit (see Billing in the Anthropic Console).

- On the YouTube video page → click “Show Transcript” → copy the text and save it as “raw.txt”.

Step 2: Clean the YouTube transcript

YouTube transcripts are notoriously messy. Because I am going to have Claude analyse the content in depth and restructure it into a presentation with better flow, I want the transcript cleaned up somewhat before that analysis.

Here is the start of raw.txt, which runs to 666 lines:

0:00

foreign [Music]

0:13

okay um yeah um so today we’re fortunate to have

0:19

Josh Tobin here who after getting his PhD from UC Berkeley and working as a

0:25

research at open AI is now the CEO and co-founder of Gantry he will

0:30

tell us about evaluating your llms for your applications

0:36

all right I’m excited to be here um unfortunately

…
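Much of this mess is mechanical: every caption fragment sits under its own timestamp line, sentences break across fragments, and sound cues such as “[Music]” are mixed into the text. If you want to shave tokens before Claude sees the transcript, a small deterministic pass can handle that part. The sketch below is optional and not part of the workflow in this post; it assumes the timestamp-per-line layout shown above, and `strip_timestamps` is a hypothetical helper. Filler words and the stray “foreign” are left for Claude to judge.

```python
import re

# Hypothetical pre-cleaning helper; assumes one timestamp per line, as in raw.txt.
# Matches caption timestamps such as "0:13", "12:05" or "1:02:45" on their own line.
TIMESTAMP = re.compile(r"^\d{1,2}(?::\d{2}){1,2}$")
# Matches bracketed sound cues such as "[Music]" or "[Applause]".
CUE = re.compile(r"\[[^\]]*\]")


def strip_timestamps(raw: str) -> str:
    """Drop timestamp lines and bracketed cues, then join caption fragments."""
    fragments = []
    for line in raw.splitlines():
        line = line.strip()
        if not line or TIMESTAMP.match(line):
            continue  # skip blank lines and bare timestamps
        # Remove cues and collapse the whitespace they leave behind.
        cleaned = " ".join(CUE.sub(" ", line).split())
        if cleaned:
            fragments.append(cleaned)
    # Caption lines break mid-sentence, so join them with single spaces.
    return " ".join(fragments)


if __name__ == "__main__":
    sample = (
        "0:00\n\nforeign [Music]\n\n0:13\n\n"
        "okay um yeah um so today we're fortunate to have\n\n0:19\n\nJosh Tobin here"
    )
    print(strip_timestamps(sample))
    # prints: foreign okay um yeah um so today we're fortunate to have Josh Tobin here
```

To use it on the real file, read raw.txt with `open("raw.txt", encoding="utf-8").read()` and pass the string in.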

Here’s my prompt to clean it up:

Here is a transcript copied from YouTube:

<raw> … </raw>

Clean <raw> so that it is easier for an LLM to comprehend. It must closely preserve original intent and meaning.

“Clean” could mean a lot of things to Claude.

Let’s get Claude to generate a better prompt:

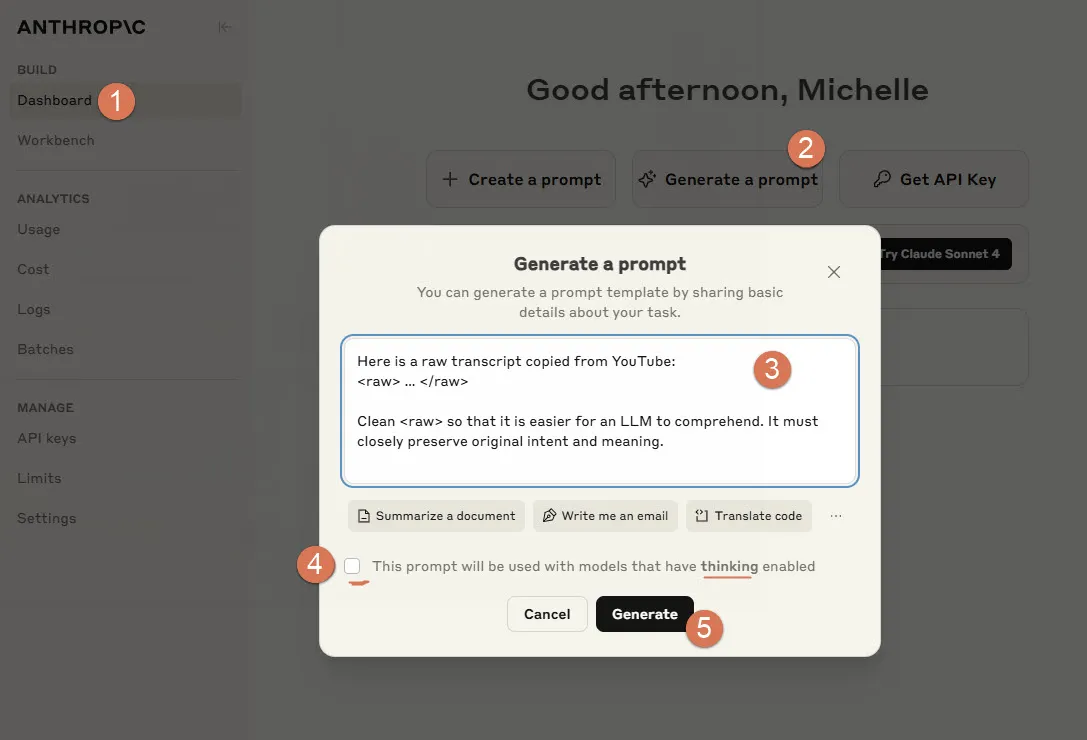

- Anthropic Console → click “Dashboard”

- Follow the 5 steps below to generate a prompt for “normal” mode

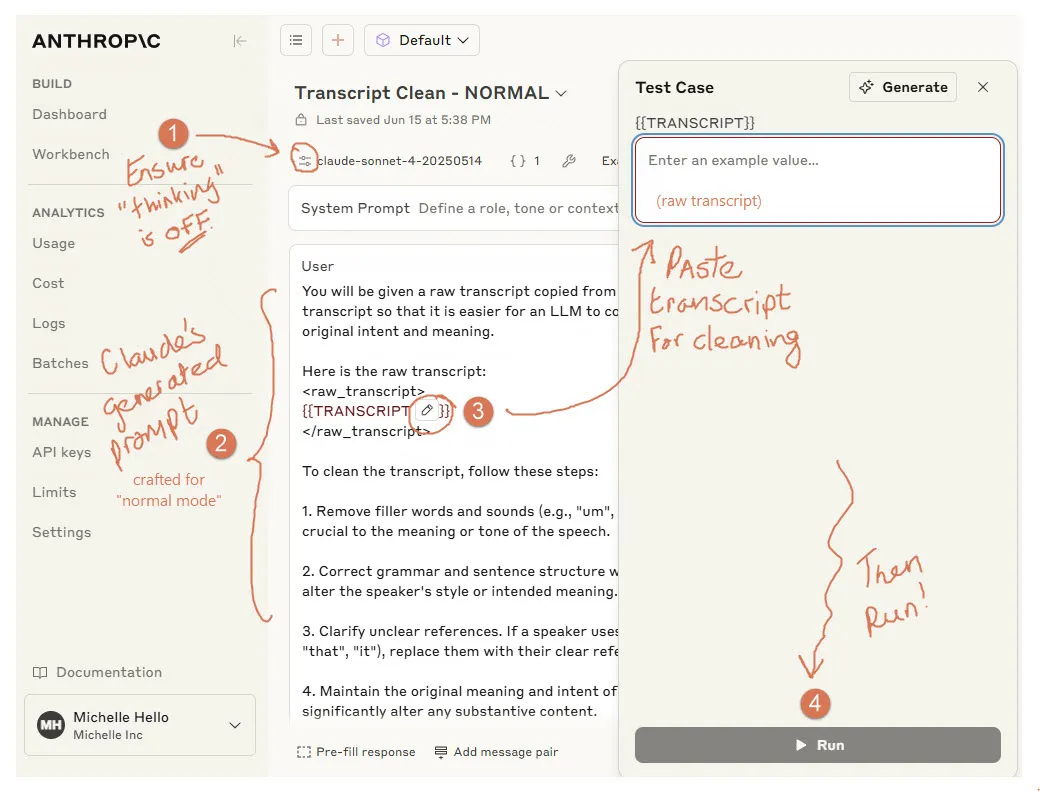

- Now run the generated prompt on the transcript data.

- Now repeat these steps but for “thinking” mode: check “This prompt will be used with models that have thinking enabled” in Image 1, and switch thinking on in Image 2.
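The console handles both runs for you, but the same normal-versus-thinking comparison can be scripted against the Messages API. The sketch below only builds the request body, so it runs without an API key. In the API, thinking is switched on with a `thinking` object whose `budget_tokens` must be at least 1,024 and smaller than `max_tokens`. The model ID and both token limits are assumptions chosen for illustration; check them against Anthropic's current documentation. `build_request` is a hypothetical helper.

```python
import json

# Assumed model ID; check Anthropic's model list for current names.
MODEL = "claude-sonnet-4-20250514"


def build_request(prompt: str, thinking: bool = False,
                  max_tokens: int = 16000, budget_tokens: int = 4000) -> dict:
    """Build a Messages API request body for normal or thinking mode.

    max_tokens and budget_tokens defaults are illustrative assumptions.
    """
    body = {
        "model": MODEL,
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if thinking:
        # Extended thinking needs a budget of at least 1,024 tokens,
        # and the budget must be smaller than max_tokens.
        if not 1024 <= budget_tokens < max_tokens:
            raise ValueError("budget_tokens must be >= 1024 and < max_tokens")
        body["thinking"] = {"type": "enabled", "budget_tokens": budget_tokens}
    return body


if __name__ == "__main__":
    prompt = "Clean <raw> ... </raw>"  # placeholder prompt text
    print(json.dumps(build_request(prompt, thinking=True), indent=2))
```

With the official `anthropic` Python SDK installed and `ANTHROPIC_API_KEY` set, a body can be sent with `anthropic.Anthropic().messages.create(**build_request(prompt))`. The response's `usage` field reports input and output tokens. Thinking tokens are billed as output, which is where thinking mode's extra cost shows up.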

What Happened?

Both generated prompts were far more explicit than mine (being explicit is an Anthropic best practice¹). My prompt's output was not at all what I needed: a long list of bullet points. There was no notable difference in output quality between the two generated prompts; the “thinking mode” prompt just took longer and cost more.

For powerful models like Claude 4, cleaning a YouTube transcript does not require deep thinking or introspection. It is a task suited to “normal mode”.

Here is the generated prompt for “normal” mode:

You will be given a raw transcript copied from YouTube. Your task is to clean this transcript so that it is easier for an LLM to comprehend while closely preserving the original intent and meaning.

Here is the raw transcript:

<raw_transcript>

{{TRANSCRIPT}}

</raw_transcript>

To clean the transcript, follow these steps:

1. Remove filler words and sounds (e.g., “um”, “uh”, “like”, “you know”) unless they are crucial to the meaning or tone of the speech.

2. Correct grammar and sentence structure where necessary, but be careful not to alter the speaker’s style or intended meaning.

3. Clarify unclear references. If a speaker uses vague pronouns or deixis (e.g., “this”, “that”, “it”), replace them with their clear referents when possible.

4. Maintain the original meaning and intent of the speech. Do not add, remove, or significantly alter any substantive content.

5. Preserve important pauses or emphasis that contribute to the meaning. You can use ellipses (…) for significant pauses or ALL CAPS for emphasized words if necessary.

6. Break up long, run-on sentences into shorter, clearer ones when it improves readability without changing the meaning.

7. Correct any obvious transcription errors (e.g., homophones or misheard words) based on context.

After cleaning the transcript, output your result within <cleaned_transcript> tags.

Remember, your primary goal is to make the transcript easier for an LLM to comprehend while closely preserving the original intent and meaning. Do not embellish or interpret beyond what is necessary for clarity.
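The double-brace placeholder in the generated prompt is a prompt variable. When you run the prompt in the console, the console fills it with your transcript. Outside the console you can do the substitution yourself. The sketch below is a minimal version of that step. `fill_template` is a hypothetical helper: it replaces each `{{NAME}}` with the matching value and raises an error if any placeholder has no value, which also catches a misspelt variable name.

```python
import re

# Matches {{NAME}} placeholders such as {{TRANSCRIPT}}.
PLACEHOLDER = re.compile(r"\{\{(\w+)\}\}")


def fill_template(template: str, values: dict[str, str]) -> str:
    """Replace each {{NAME}} with values[NAME]; raise if any name is missing."""
    missing = set(PLACEHOLDER.findall(template)) - set(values)
    if missing:
        raise KeyError(f"no value for placeholder(s): {sorted(missing)}")
    # A function replacement inserts the text literally, so backslashes are safe.
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


if __name__ == "__main__":
    template = "<raw_transcript>\n{{TRANSCRIPT}}\n</raw_transcript>"
    print(fill_template(template, {"TRANSCRIPT": "okay um so today we're fortunate to have"}))
```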

Winning response (best on quality and cost), the cleaned transcript from normal mode:

<cleaned_transcript>

Today we’re fortunate to have Josh Tobin here who, after getting his PhD from UC Berkeley and working as a research scientist at OpenAI, is now the CEO and co-founder of Gantry. He will tell us about evaluating your LLMs for your applications.

I’m excited to be here. Unfortunately I have not been lucky enough to attend much of the conference so far, but I’ve heard a rumor that it’s been all about hype around LLMs. Is that true? Yes, okay. So how many are buying into the hype at this point? That is a good chunk. And how many are still skeptical? Okay, also a healthy chunk.

…

</cleaned_transcript>
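The prompt asks for the result inside tags, so the cleaned text is easy to pull out of Claude's reply before you feed it into the next step. The sketch below assumes the reply contains a single `<cleaned_transcript>` block like the one above. `extract_tag` is a hypothetical helper.

```python
import re


def extract_tag(reply: str, tag: str = "cleaned_transcript") -> str:
    """Return the text between <tag> and </tag>, trimmed of surrounding whitespace."""
    name = re.escape(tag)
    # DOTALL lets ".*?" span the many lines of a transcript; "?" stops at the first close tag.
    match = re.search(rf"<{name}>(.*?)</{name}>", reply, flags=re.DOTALL)
    if match is None:
        raise ValueError(f"no <{tag}> block found in reply")
    return match.group(1).strip()


if __name__ == "__main__":
    reply = "<cleaned_transcript>\n\nToday we're fortunate to have Josh Tobin here.\n\n</cleaned_transcript>"
    print(extract_tag(reply))
    # prints: Today we're fortunate to have Josh Tobin here.
```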

A quick comparison of the prompts and their outputs:

| Which prompt? | Prompt | Output |
|---|---|---|
| My prompt | Very simple, without detail | Fail: a concise point-form summary |
| Generated (normal mode) | Substantially more relevant instructions than mine | Great: flowing prose that closely follows the YouTube video |
| Generated (thinking mode) | Compared to the normal-mode prompt: more structured, detailed, prescriptive rules | Minor stylistic improvements in rephrasing |

Step 3: Topics and Flow

With the cleaned transcript (30% fewer tokens than the original), I now need Claude to identify the main topics and then organise them into a coherent storytelling flow for my Gamma slide deck.
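The token saving is a simple ratio: reduction = 1 − cleaned tokens / original tokens. The sketch below shows the calculation. The counts in it are hypothetical round numbers for illustration, not measurements from this transcript. Real counts could come from the `usage.input_tokens` field of an API response, or from Anthropic's token-counting endpoint.

```python
def token_reduction(original_tokens: int, cleaned_tokens: int) -> float:
    """Fraction of tokens saved by cleaning: 1 - cleaned / original."""
    if original_tokens <= 0:
        raise ValueError("original_tokens must be positive")
    return 1 - cleaned_tokens / original_tokens


# Hypothetical counts for illustration only: 10,000 tokens before, 7,000 after.
print(f"{token_reduction(10_000, 7_000):.0%}")  # prints: 30%
```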

Here is my prompt:

(to be completed)

[etc.]

Step 4: Creating a Gamma Deck

This is the fun part. We now have the content analysed and ready, and we have a brand guide to replicate. Gamma is going to love this for slide-deck generation.

(tbc)

Appendix: Normal vs Thinking Mode

To get markedly better output, you need to craft prompts differently for “Normal” mode than for cases where you know you will need “Thinking” mode.

But when do you need thinking mode?

(tbc)

How do you prompt differently for thinking?

(tbc)